This article was originally published on 15th May 2025 and was written by a former employee of Netizen eXperience, Tey Swan Ling.

In the first two installments of this series, Swan Ling established Gemini Advanced as a capable research sidekick for her internal digital wallet study. Starting with Phase 1, she utilized the CARE framework to generate comprehensive research plans, proving that detailed, structured prompting is key to effective AI collaboration. Phase 2 saw the focus shift to logistics, where Gemini streamlined the recruitment process by drafting tailored invitation messages and organizing interview schedules directly within Google Sheets. Now, the experiment moves from preparation to execution, exploring how Gemini handles the complexities of fieldwork.

Phase 3: Fieldwork and Testing – A Collaborative Effort

Now, we move on to Phase 3, a crucial stage that involves taking our research plan out into the real world. This phase centers on two main activities, which I'll discuss individually: creating the discussion guide, and the fieldwork itself, that is, conducting the user interviews.

Phase 3.1: Crafting the Discussion Guide with AI – A Collaborative Creation

The discussion guide, the roadmap for gathering user insights, demands careful development. In our manual process, we typically take around two to four working days to create, review, and finalize the discussion guide before the client walkthrough.

This is where AI’s promise of speed, efficiency and creativity could be evaluated. In short, Gemini proved to me what it could do – it was significantly faster, more efficient, and a valuable partner for brainstorming and exploring different perspectives to prepare a thoughtful discussion guide.

Time-saving: Enhanced Efficiency and Accelerated Initial Drafts

This phase truly highlighted Gemini’s capabilities, particularly in terms of speed. I began by asking Gemini to create a discussion guide for my user interview. Impressively, it promptly delivered a first draft, providing a solid foundation and the basic structure needed to get started. This rapid generation demonstrates a clear time advantage over our typical manual process of two to four working days.

However, this initial speed came with a caveat: the draft was somewhat generic, lacking the specific focus on financial behavior and payment methods that we needed for a holistic view of potential user personas.

Refinement Process: An Iterative Process with Human Expertise

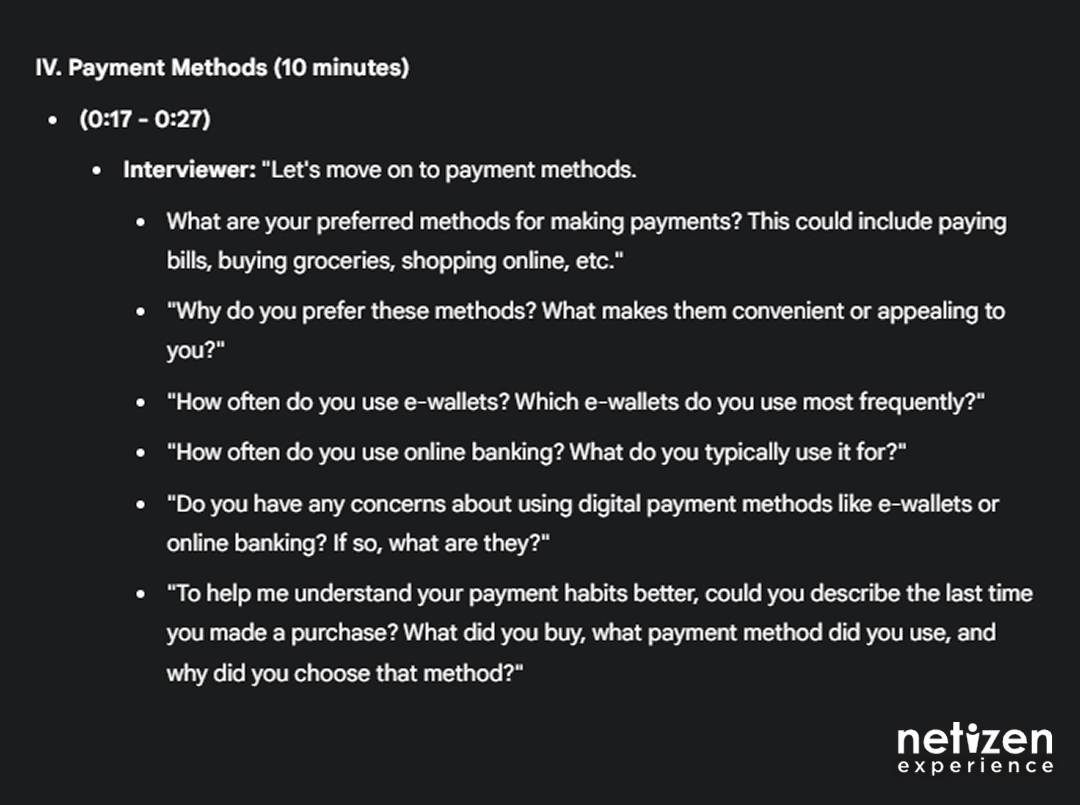

Hence, I put on my UX researcher hat to refine the discussion guide to my research needs, which involved multiple rounds of interaction with Gemini. This included:

- Adding objectives:

  - Recognizing that Gemini did not automatically include them, I prompted it to define clear objectives for each question, ensuring every inquiry directly contributed to our research goals.

  - Prompt example: “Please include an objective for each question asked.”

- Targeting the inquiry:

  - I further refined the guide by focusing Gemini on specific areas. For example, I narrowed the scope to prioritize payment method preferences and cash usage over general financial goals.

  - Prompt example: “Can you modify the 30-minute discussion guide to focus on payment methods for daily expenses and minimize the section on general financial behavior/goals? I want to include questions about payment method types, preferences and reasons, top 3 rankings, purpose of using a specified payment method (if any), influencing factors, and questions relating to cash usage.”

- Incorporating follow-up questions:

  - To ensure comprehensive and consistent data across all interviews, I instructed Gemini to suggest potential follow-up and situational questions based on anticipated responses.

  - Prompt example: “Can you craft out potential follow-up questions accordingly, as well as situational ones such as if the user says yes or no?”

- Estimating durations:

  - To adhere to the 30-minute interview limit, I also asked Gemini to estimate the time allocation for each section.

  - Prompt example: “Please specify estimated time spent in each section as well.”

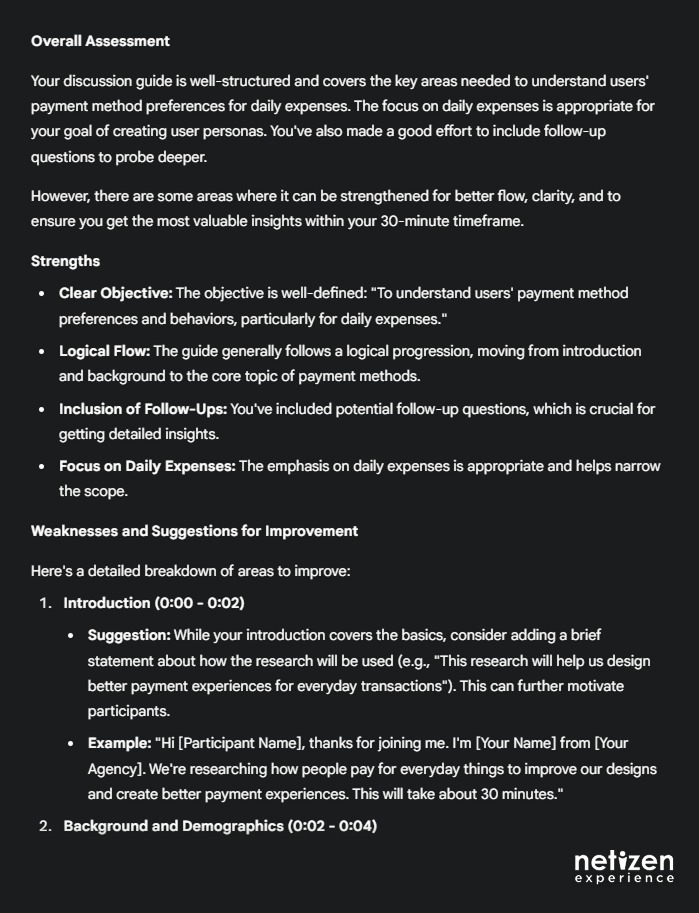

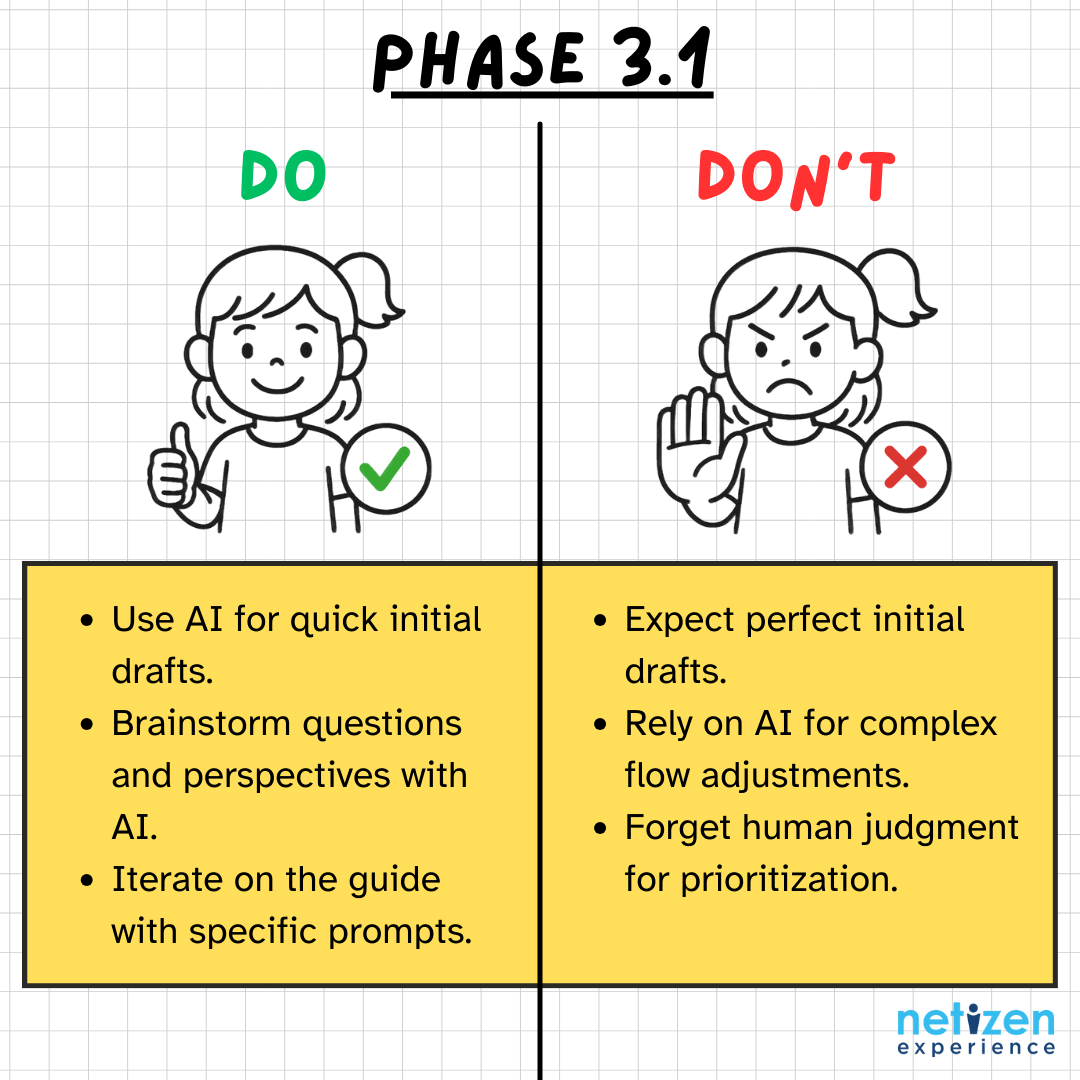

The discussion guide improved significantly after several rounds of refinement. However, I ultimately stepped in to manually adjust the question order for a more natural conversational flow. This manual step made more sense because Gemini struggled to integrate revisions to specific questions precisely into the whole discussion guide.

Prompting Gemini to reorder the questions became more cumbersome and time-consuming than simply tweaking the sequence myself. Once I was satisfied with my manual flow adjustment, the final step was to have Gemini review the entire guide, acting as an experienced UX consultant to provide comprehensive feedback.

By this point, I was impressed by Gemini’s help and could see it as a valuable tool in my workflow. The key benefit was not reduced work but a shift from manual creation to strategic thinking about how to refine the AI’s output. Instead of starting from scratch, I could focus my expertise on reviewing and aligning Gemini’s suggestions with our standard practices and research goals.

However, this experience also highlighted a crucial point: AI's effectiveness heavily depends on the UX researcher's expertise in guiding and evaluating its output.

Human Judgment Remains Key

This refinement process is a good reminder that while Gemini can offer valuable starting points and suggestions, the expertise and skills of a UX researcher remain crucial for the effective use of AI.

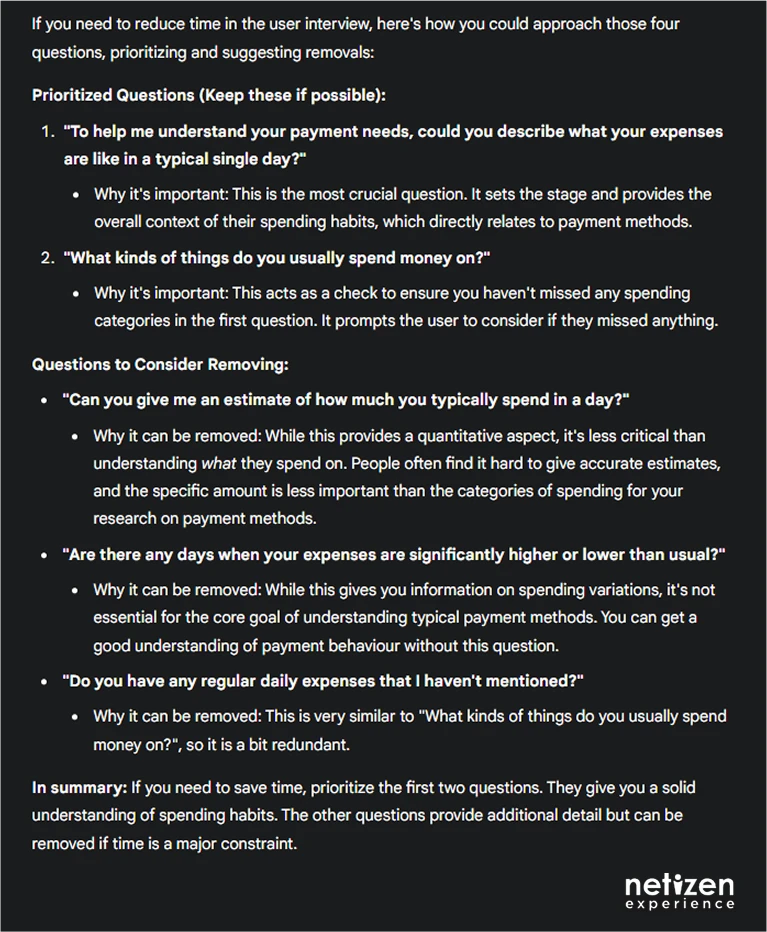

For example, despite Gemini's estimated timings, my research experience suggested the session could potentially overrun, just based on the number of questions. So, I prompted it to further prioritize or cut questions based on research goals. While its reasoning was helpful, the final decision on what to include rested with me.
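The overrun check I did in my head comes down to simple arithmetic. Here is a minimal Python sketch of it, offered as an illustration rather than something I ran during the study. The section names, minute estimates, question counts, and the two-minutes-per-question average are all hypothetical assumptions; only the 30-minute limit comes from the actual study.

```python
from dataclasses import dataclass

SESSION_LIMIT_MIN = 30  # interview limit used in the study


@dataclass
class Section:
    name: str             # hypothetical section title
    estimated_min: float  # time estimate, e.g. as suggested by Gemini
    questions: int        # number of questions in the section


def budget_report(sections, minutes_per_question=2.0, limit=SESSION_LIMIT_MIN):
    """Compare section estimates and a per-question estimate against the limit.

    minutes_per_question is an assumed average, not a measured value.
    """
    estimated_total = sum(s.estimated_min for s in sections)
    question_total = sum(s.questions for s in sections) * minutes_per_question
    return {
        "estimated_total": estimated_total,
        "question_based_total": question_total,
        # Flag a risk if either way of counting exceeds the limit.
        "overrun_risk": max(estimated_total, question_total) > limit,
    }


# Illustrative numbers only; not the real discussion guide.
guide = [
    Section("Warm-up", 3, 2),
    Section("Payment methods", 15, 9),
    Section("Cash usage", 8, 5),
    Section("Wrap-up", 4, 2),
]

report = budget_report(guide)
print(report)
```

With these made-up numbers, the section estimates fit the limit exactly while the question count suggests an overrun, which mirrors the situation where a researcher's judgment has to decide what to cut.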

Phase 3.2: Fieldwork – Gemini’s Contextual Awareness

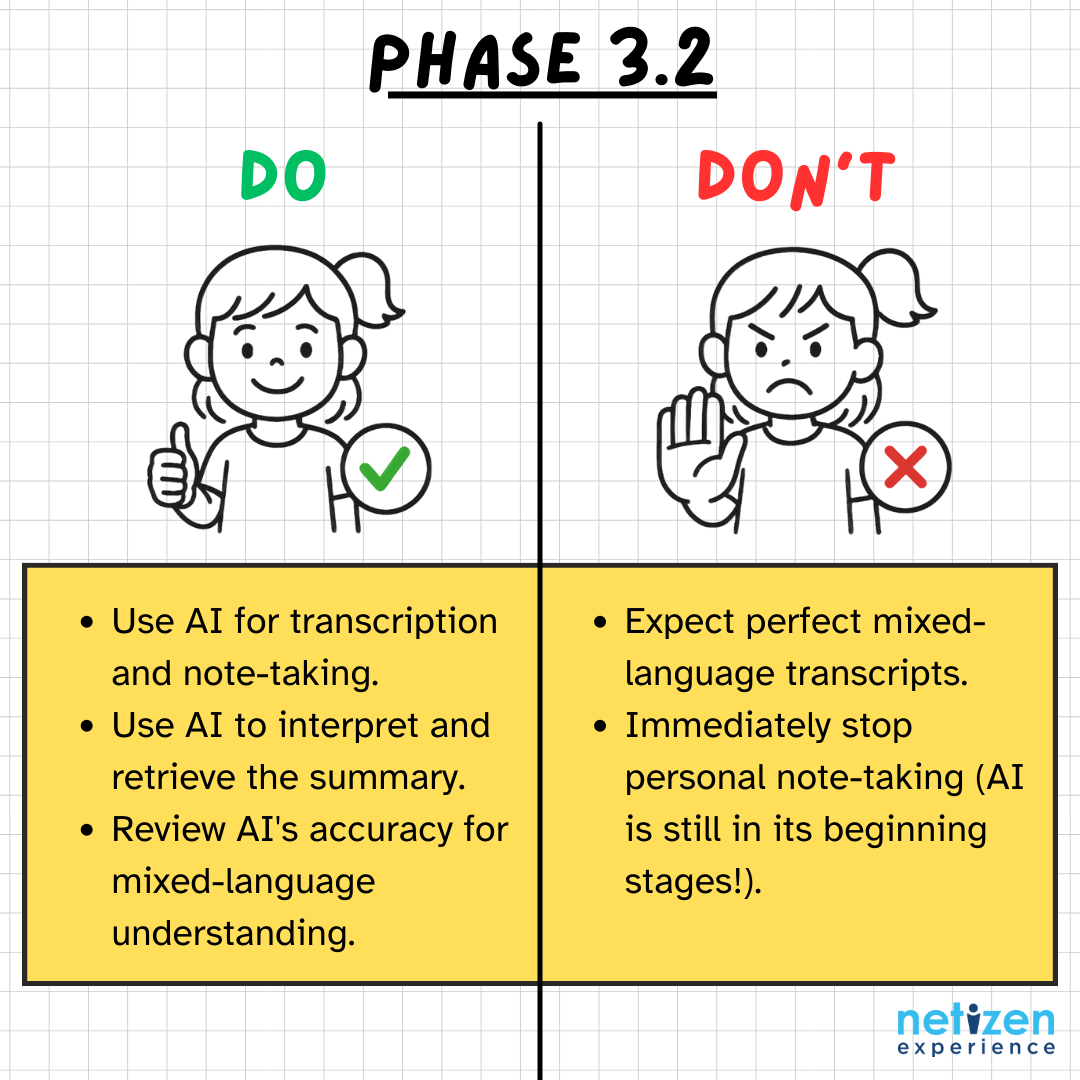

With the discussion guide finalized, we move on to the most exciting (personal opinion!) phase: fieldwork, or the direct execution of user interviews. While my direct interaction with AI during the fieldwork sessions was minimal, my digital sidekick, Google Meet, worked quietly in the background. I used Google Meet’s AI-powered note-taking and transcription features as my project partner to capture every detail.

Your Silent Ally: AI-Powered Transcription and Note-Taking

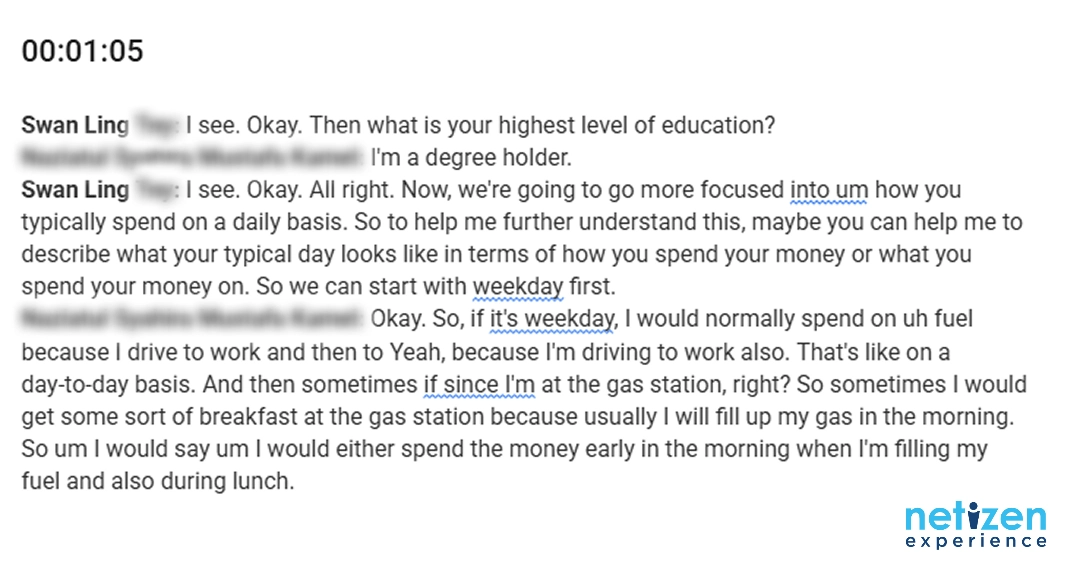

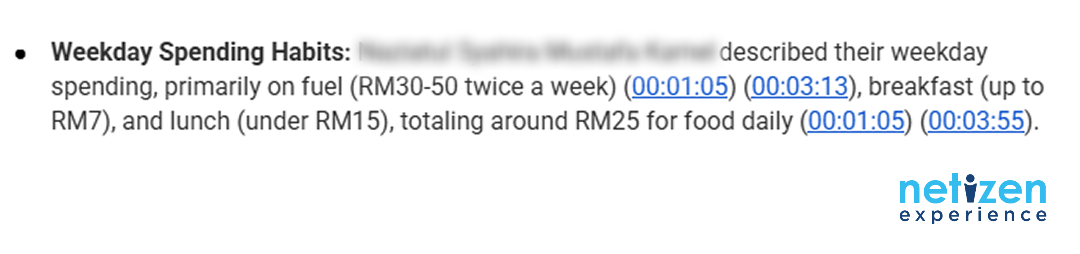

Google Meet's transcription feature was a game-changer, providing both an accurate summary and a detailed word-for-word transcript for each interview. Both are foundational for the synthesis and analysis phase, where we build detailed user personas.

This capability also points to the potential for reduced manpower, a significant benefit during the fieldwork stage, where the moderator's direct engagement is the most crucial element. There is a waiting period of about 10-15 minutes for the AI-powered recording, transcription, and summary to be ready (potentially longer depending on your interview’s duration), but it is a small trade-off given the valuable insights you gain from directly engaging with the users.

AI-Powered Contextual Understanding in Post-Interview Analysis

An additional pleasant discovery was AI's ability to understand Malaysians' habit of mixing multiple languages within a single sentence.

As a company based in Malaysia, which is a multicultural and multilingual country where “bahasa campur” (mixed language) is a common way of speaking, we're used to blending words from Malay, English, and sometimes other languages in everyday conversations. Curious to see how well Google Meet’s transcription could handle this, I intentionally asked a colleague to mix Malay words into their interview responses.

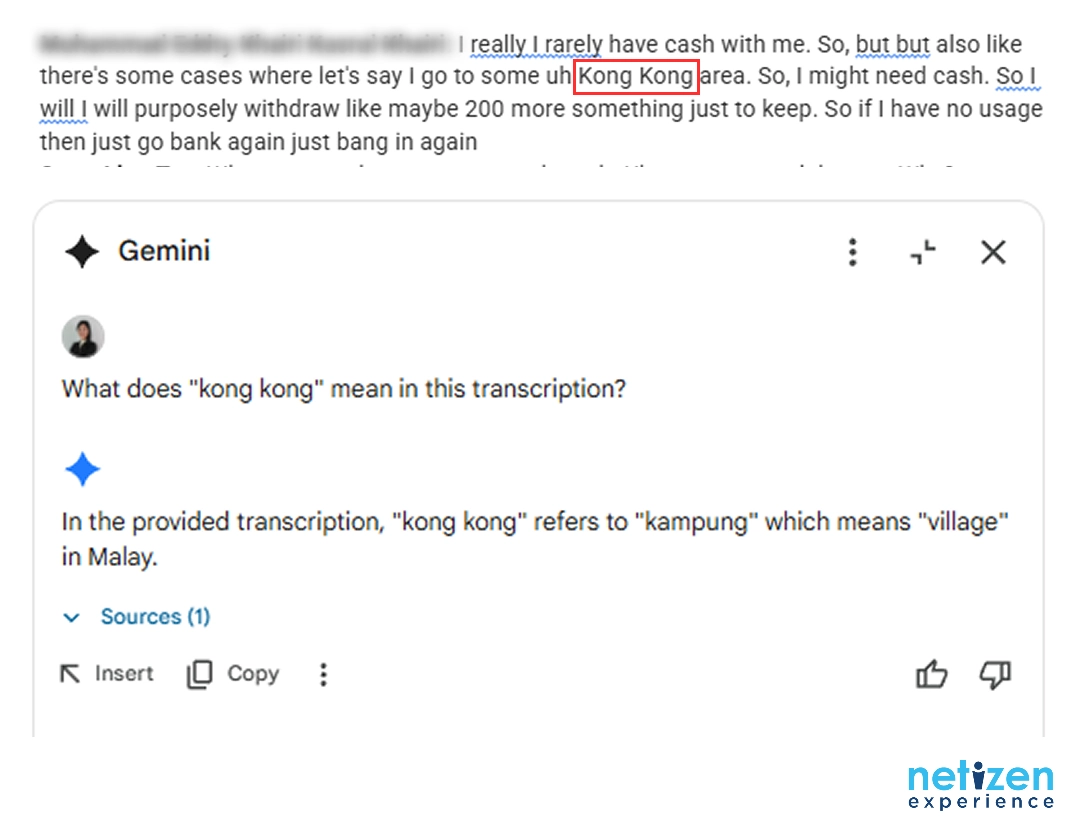

Google Meet's transcription struggled and transcribed some Malay words into gibberish, rendering kampung (village) as “kong kong” and pisang goreng (banana fritters) as “goring pis”. Surprisingly, Gemini was still able to infer the intended meaning in its summary. For instance, it correctly picked up that my participant was referring to rural areas, even though the transcript rendered the word as “kong kong”. This discovery was a nice surprise, demonstrating Gemini’s contextual understanding of mixed-language communication, a reality deeply embedded in the Malaysian experience.
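For anyone who wants a cleaner transcript before analysis, known mis-transcriptions can also be corrected with a small glossary pass. The Python sketch below is only an illustration and is not how Gemini inferred the meaning: it is a plain find-and-replace, the glossary holds just the two errors mentioned above, and the sample sentence is invented.

```python
import re

# Hypothetical glossary: mis-transcriptions mapped to the intended Malay words.
GLOSSARY = {
    "kong kong": "kampung",
    "goring pis": "pisang goreng",
}


def correct_transcript(text, glossary=GLOSSARY):
    """Replace known mis-transcriptions, ignoring case and matching whole words."""
    for wrong, right in glossary.items():
        pattern = re.compile(r"\b" + re.escape(wrong) + r"\b", flags=re.IGNORECASE)
        text = pattern.sub(right, text)
    return text


# Invented sample sentence, not a quote from any participant.
sample = "I grew up in a kong kong and we sold goring pis by the road."
print(correct_transcript(sample))
```

A glossary like this only catches errors that have already been spotted, so it complements a contextual summary rather than replacing it.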

The Mindset Shift: Are We Ready to Move to AI-Assisted Research?

Honestly, while Google Meet's transcription, note-taking, and summary features proved useful in this phase, the biggest hurdle was a personal one: trusting AI enough to truly consider it a project partner. Despite its capabilities, I still felt compelled to take my own notes, though admittedly, part of that was for the purpose of this article, to evaluate and compare with the usual manual process. It made me wonder: are we truly ready to fully trust AI in this capacity?

On a personal note, the crucial factors are accurate data and easy retrieval of individual participant responses. If AI can deliver on these, I see no issue in making it my primary note-taker. This emergence of AI is a real opportunity for UX researchers to demonstrate their adaptability and embrace what could be a significantly improved workflow.

Missed the prep work? Catch up on Part 1 (Planning) and Part 2 (Recruitment), and stay tuned for the final installment where we break down the data in Part 4!